The most powerful AI supercomputing platform with end-to-end capabilities

Designed Specifically for the Integration of Simulation, Data Analytics, and AI

Massive datasets, growing model sizes, and complex simulations demand multiple GPUs with extremely fast interconnects and a fully accelerated software stack.

The NVIDIA HGX™ AI supercomputing platform combines the full capability of NVIDIA GPUs, NVIDIA NVLink®, NVIDIA InfiniBand™ networking, and a fully optimized NVIDIA AI and HPC software stack from the NVIDIA NGC™ catalog to achieve the highest level of performance for applications.

With its all-around performance and adaptability, NVIDIA HGX™ allows researchers and scientists to merge simulation, data analytics, and AI to advance scientific discovery.

Unrivaled End-to-End Accelerated Computing Platform

NVIDIA HGX™ integrates NVIDIA A100 Tensor Core GPUs with high-speed connections to create the world's most powerful servers. With 16 A100 GPUs, HGX has up to 1.3 terabytes of GPU memory and over 2 terabytes per second of memory bandwidth for unparalleled acceleration.
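The 1.3-terabyte figure follows directly from the GPU count and the per-GPU memory. The short Python sketch below reproduces that arithmetic. The function name and the decimal conversion (1 TB = 1000 GB) are illustrative assumptions, not part of any NVIDIA tool.

```python
# Sketch: aggregate GPU memory of a 16-GPU HGX system.
# The helper name and decimal units (1 TB = 1000 GB) are assumptions.

def aggregate_memory_tb(gpu_count: int, gb_per_gpu: int) -> float:
    """Return the total GPU memory in terabytes."""
    return gpu_count * gb_per_gpu / 1000

hgx_80gb = aggregate_memory_tb(16, 80)  # A100 80GB configuration
hgx_40gb = aggregate_memory_tb(16, 40)  # A100 40GB configuration

print(f"16 x A100 80GB: {hgx_80gb:.2f} TB")
print(f"16 x A100 40GB: {hgx_40gb:.2f} TB")
```

Sixteen 80GB GPUs give 1.28 TB, which rounds to the quoted 1.3 TB and is twice the capacity of the 40GB configuration.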

Compared to previous generations, HGX offers up to a 20X acceleration for AI with Tensor Float 32 (TF32) and a 2.5X boost for HPC with FP64. NVIDIA HGX™ delivers a phenomenal 10 petaFLOPS, creating the world's most powerful accelerated server platform for AI and HPC.

Performance Overview

Performance in Deep Learning

Deep learning models are growing rapidly in size and complexity, requiring a system with large amounts of memory, massive computing power, and fast interconnects to scale. With NVIDIA NVSwitch™ providing high-speed all-to-all GPU communication, NVIDIA HGX™ can handle the most advanced AI models.

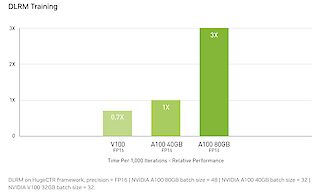

With A100 80GB GPUs, GPU memory is doubled, offering up to 1.3TB of memory within a single NVIDIA HGX™. Emerging workloads that require the largest models, such as deep learning recommendation models (DLRM), which have massive data tables, are accelerated by up to 3X compared with NVIDIA HGX™ powered by A100 40GB GPUs.

Image: Up to 3X Improved AI Training on the Most Complex Models

Performance in Machine Learning

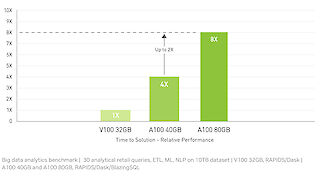

Machine learning models require loading, transforming, and processing vast amounts of data in order to extract important insights. With up to 1.3TB of unified memory and all-to-all GPU communication via NVIDIA NVSwitch™, NVIDIA HGX™ powered by A100 80GB GPUs has the capability to quickly load and process large datasets to generate valuable insights.

On a big data analytics benchmark, A100 80GB delivered insights 2X faster than A100 40GB, making it well suited to emerging workloads with rapidly growing datasets.

Image: 2X Faster than A100 40GB on Big Data Analytics Benchmark

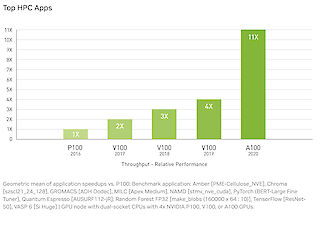

Performance in HPC

HPC applications require a large number of calculations per second. Increasing the processing power of each server node significantly reduces the number of servers required, saving cost, power, and space in the data center. For simulations, high-dimensional matrix multiplications require each processor to fetch data from many neighbors for computation, making GPUs connected by NVIDIA NVLink® ideal. HPC applications can also use TF32 in the A100 to achieve an 11X increase in throughput for single-precision dense matrix-multiply operations over four years.
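TF32 trades precision for throughput: it keeps the 8-bit exponent of single precision but only 10 of its 23 mantissa bits. The Python sketch below emulates that reduced precision in software to show what a matrix-multiply input looks like after conversion. It is a simplified illustration under stated assumptions: the function names are hypothetical, the conversion truncates rather than rounds, and the products are accumulated in Python's double precision, none of which is guaranteed to match the hardware.

```python
import struct

def to_tf32(x: float) -> float:
    """Emulate TF32 by keeping only the top 10 mantissa bits of a float32.

    Assumption: plain truncation; real hardware rounding may differ.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits &= 0xFFFFE000  # clear the 13 low-order mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def dot_tf32(a, b):
    """Dot product with both inputs reduced to TF32 precision first."""
    return sum(to_tf32(x) * to_tf32(y) for x, y in zip(a, b))

# A 2**-10 step above 1.0 survives the conversion; a 2**-11 step does not.
kept = to_tf32(1.0 + 2**-10)
lost = to_tf32(1.0 + 2**-11)
print(kept, lost)
```

Values that differ only below the tenth mantissa bit become indistinguishable, which is why TF32 suits workloads that tolerate roughly three decimal digits of input precision.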

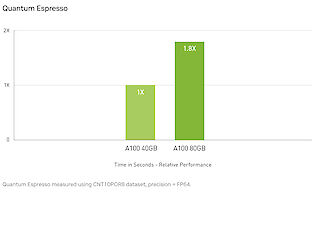

An HGX™ system powered by A100 80GB GPUs can deliver a 2X throughput increase over A100 40GB GPUs on Quantum Espresso, a materials simulation application, resulting in faster insights.

Get your NVIDIA HGX™ AI Supercomputer!

We're an NVIDIA Elite Partner and have all the expertise you need.

We look forward to hearing from you!